UML Architecture


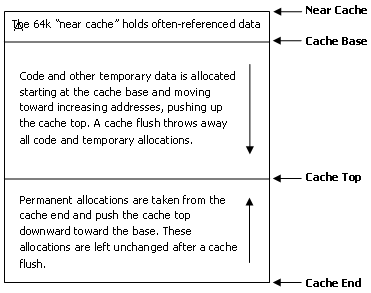


Revision as of 19:39, 14 May 2008

This article describes the Universal Machine Language runtime architecture.

Machine Architecture

At its heart, the Universal Machine Language describes an abstract, primarily 32-bit computer architecture. It has been designed with several goals in mind:

- dynamic recompilers should be able to express common operations simply

- 64-bit integer operations should be supported, even if they are not preferred

- creating x86 and PowerPC back-ends (both 32-bit and 64-bit) should be relatively straightforward

- a back-end written in a high-level language such as C should have reasonable performance

In addition to a collection of opcodes, described below, the Universal Machine Language also describes an abstract runtime architecture with several basic requirements:

- 10 64-bit integer registers (i0-i9)

- 10 64-bit floating point registers (f0-f9)

- 10 32-bit "map variables" (m0-m9) which map values onto sections of code

- 5 flag bits that can be optionally set on most instructions

- 1 internal exception parameter register

- a 16-entry call stack for subroutine and exception handling

Because each back-end targets a different final CPU architecture, these abstract requirements may not map perfectly; however, it is the job of the back-end code generator to provide an implementation that fully supports all of these requirements. For example, the target CPU may not have enough free registers to hold 10 64-bit values, so some of those registers may be implicitly converted by the back-end into memory references. More details on how to provide these abstractions will be available in the Back-End Author's Guide.
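As a rough illustration of the requirements listed above, the abstract state could be modeled as follows. This is only a sketch: the class name `MachineState`, its field names, and the choice to pack the flag bits into one integer are assumptions made for the example, not part of the UML definition.

```python
from dataclasses import dataclass, field

NUM_REGS = 10            # i0-i9, f0-f9 and m0-m9 each come in sets of ten
NUM_FLAG_BITS = 5        # five flag bits; their names are not modeled here
CALL_STACK_DEPTH = 16    # 16-entry call stack
MASK64 = (1 << 64) - 1
MASK32 = (1 << 32) - 1


@dataclass
class MachineState:
    """Hypothetical container for the abstract UML runtime state."""
    ireg: list = field(default_factory=lambda: [0] * NUM_REGS)     # 64-bit integer registers
    freg: list = field(default_factory=lambda: [0.0] * NUM_REGS)   # 64-bit floating point registers
    mapvar: list = field(default_factory=lambda: [0] * NUM_REGS)   # 32-bit map variables
    flags: int = 0                                                 # flag bits packed into an int (assumption)
    exp: int = 0                                                   # internal exception parameter register
    call_stack: list = field(default_factory=list)                 # subroutine/exception return targets

    def set_ireg(self, index, value):
        # Integer registers hold 64 bits; wider values are truncated.
        self.ireg[index] = value & MASK64

    def set_mapvar(self, index, value):
        # Map variables hold 32 bits.
        self.mapvar[index] = value & MASK32

    def set_flags(self, value):
        # Only the low five bits are meaningful.
        self.flags = value & ((1 << NUM_FLAG_BITS) - 1)

    def push_call(self, return_target):
        # The abstract machine only guarantees 16 entries.
        if len(self.call_stack) >= CALL_STACK_DEPTH:
            raise OverflowError("call stack is limited to 16 entries")
        self.call_stack.append(return_target)

    def pop_call(self):
        return self.call_stack.pop()
```

A back-end written in a high-level language might keep something like this in memory directly, while a native back-end would map as much of it as possible onto real registers.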

Code Cache

One of the primary features of a dynamic recompiler is its ability to cache and quickly recall already-translated code. Because of this, the concept of a code cache is central to the UML. The code cache not only contains all the generated code, along with the necessary hash tables to find it, but it also serves as a general heap for any data referenced by the generated code. Memory can be allocated from the cache and thus kept in the vicinity of the code that is likely to reference it. On many architectures, memory that is close to the code can be more efficiently accessed, so it is important to make good use of the memory management provided by the cache.

The cache is created by the dynamic recompiler at initialization time. The size of the cache is fixed once it is created, so it is important to create a cache that is large enough to hold a typical translated working set. If the cache is too small, code will be flushed from it relatively quickly, and CPU usage will increase because extra time is spent re-translating code that could have been executed from the cache.

The cache is divided into three sections. The topmost section is known as the near cache and is a fixed size (64k). The near cache is where frequently-accessed data should be stored. Generally this includes the current architectural state of the CPU that is being emulated, along with tables or other data that is frequently accessed by the UML code. It is also important to realize that many UML opcodes support using memory locations as parameters, but only if those memory locations are within the near cache.
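The near-cache restriction on memory parameters amounts to a simple range check. The sketch below assumes that the near cache occupies the first bytes of the cache and that "64k" means 64 × 1024 bytes; the function name `in_near_cache` is hypothetical.

```python
NEAR_CACHE_SIZE = 64 * 1024  # "64k" in the text, assumed to mean 64 * 1024 bytes


def in_near_cache(cache_start, address, size=1):
    """Return True if [address, address + size) lies wholly inside the near cache.

    cache_start is the address of the first byte of the cache, which is
    assumed here to be where the near cache begins.
    """
    return cache_start <= address and address + size <= cache_start + NEAR_CACHE_SIZE
```

A front-end could use a check like this to decide whether a value can be passed to an opcode as a memory parameter or has to be loaded some other way.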

The bottommost section of the cache is where permanent memory allocations are taken from. Data structures that are used and re-used throughout the lifetime of the dynamic recompiler are allocated here. When memory is allocated from this section, the cache end is moved downward, reducing the amount of free space in the cache. Although memory that has been allocated from this section can be freed, it does not affect the position of the cache end. Rather, that data is kept in a free list and re-used for the next memory allocation of a similar size.
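The behavior of the permanent section can be sketched as below. Offsets are measured from the start of the cache, and the free list is keyed by exact size, which is a simplifying assumption (the text only says "similar size"). The class and method names are invented for the example, and the sketch does not check for collision with the middle section.

```python
class PermanentRegion:
    """Hypothetical model of the permanent section at the bottom of the cache."""

    def __init__(self, cache_size):
        self.end = cache_size   # cache end starts at the very bottom of the cache
        self.free_lists = {}    # size -> offsets of freed blocks of that size

    def alloc(self, size):
        # Prefer a previously freed block of the same size.
        bucket = self.free_lists.get(size)
        if bucket:
            return bucket.pop()
        # Otherwise carve a new block by moving the cache end downward.
        self.end -= size
        return self.end

    def free(self, offset, size):
        # Freed memory goes on a free list; the cache end does not move back.
        self.free_lists.setdefault(size, []).append(offset)
```

Note that `free` never increases `end`, so freeing permanent memory does not give space back to the middle section; it only makes the block available to a later permanent allocation.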

The middle section of the cache is where the most action is. This is where all temporary memory allocations and code generation take place. It starts at the cache base, which is simply fixed at the end of the near cache, and can expand as far as the cache end, which is where the permanent memory allocations lie. The cache top represents the position within this region where the next code will be generated or the next block of memory allocated. As code is generated and added to the cache, the cache top moves forward until it reaches the cache end. When that happens, the cache is flushed. A flush simply resets the cache top back to the cache base, effectively throwing away everything that has accumulated in this middle section and starting over.
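The movement of the cache top and the effect of a flush can be modeled in a few lines. This is a minimal sketch with hypothetical names; it assumes a 64 × 1024 byte near cache, treats the cache end as fixed for simplicity, and flushes as soon as a request would not fit, which is one possible reading of "reaches the cache end".

```python
NEAR_CACHE_SIZE = 64 * 1024  # assumed size of the near cache in bytes


class CodeCache:
    """Hypothetical model of the middle (temporary) section of the cache."""

    def __init__(self, size):
        self.base = NEAR_CACHE_SIZE  # cache base: fixed at the end of the near cache
        self.top = self.base         # cache top: where the next code or data goes
        self.end = size              # cache end: start of the permanent section
        self.flush_count = 0

    def alloc_temporary(self, size):
        if size > self.end - self.base:
            raise MemoryError("request can never fit in the cache")
        # Out of room: throw everything away and start over at the base.
        if self.top + size > self.end:
            self.flush()
        offset = self.top
        self.top += size
        return offset

    def flush(self):
        # A flush just resets the top; nothing is moved or preserved.
        self.top = self.base
        self.flush_count += 1
```

In a real recompiler, a flush would also have to invalidate whatever lookup structures point at the discarded code; that part is left out here.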

Although it could be argued that there might be value in keeping some frequently-used cached code around when running out of space, in practice it is not worth the extra bookkeeping necessary to make that determination. The dynamic recompiler and back-end should operate relatively quickly, making the performance hit of regenerating the code minimal.

Code Generation

UML code is generated in blocks. A block of UML code is defined to be self-contained. That is, all local jumps within the code are resolved, and all calls or jumps to code outside of the block are performed via either handles or hashes. This implies that the first instruction of a block must be either a HANDLE or a HASH opcode, and also implies that the final instruction of a block should be either a RET or a HASHJMP opcode. The specific behaviors of the opcodes are described later.
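The entry and exit rules for a block can be expressed as a small check. The sketch below represents a block as a list of opcode names, which is an assumption made for illustration; the function name `check_block` is hypothetical, and only the four opcodes named above are used.

```python
BLOCK_ENTRY_OPCODES = {"HANDLE", "HASH"}   # a block must start with one of these
BLOCK_EXIT_OPCODES = {"RET", "HASHJMP"}    # a block should end with one of these


def check_block(opcodes):
    """Return a list of problems found in a block given as opcode names."""
    if not opcodes:
        return ["block is empty"]
    problems = []
    if opcodes[0] not in BLOCK_ENTRY_OPCODES:
        problems.append("first instruction must be HANDLE or HASH")
    if opcodes[-1] not in BLOCK_EXIT_OPCODES:
        problems.append("final instruction should be RET or HASHJMP")
    return problems
```

For example, `check_block(["HANDLE", "RET"])` returns an empty list, while a block that begins with any other opcode is reported as having an invalid entry point.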

A code handle is a globally accessible reference to a block of code. In practice, a handle is allocated from the near cache and contains