UML Architecture

Latest revision as of 22:00, 9 June 2008

This article describes the Universal Machine Language runtime architecture.

Machine Architecture

At its heart, the Universal Machine Language describes an abstract, primarily 32-bit computer architecture. It has been designed with several goals in mind:

- dynamic recompilers should be able to express common operations simply

- 64-bit integer operations should be supported, even if they are not preferred

- creating x86 and PowerPC back-ends (both 32-bit and 64-bit) should be relatively straightforward

- a back-end written in a high-level language such as C should have reasonable performance

In addition to a collection of opcodes, described below, the Universal Machine Language also describes an abstract runtime architecture with several basic requirements:

- 10 64-bit integer registers (i0-i9)

- 10 64-bit floating point registers (f0-f9)

- 10 32-bit "map variables" (m0-m9) which map values onto sections of code

- 5 flag bits that can be optionally set on most instructions

- 1 internal exception parameter register

- a 16-entry call stack for subroutine and exception handling

Because each back-end targets a different final CPU architecture, these abstract requirements may not map perfectly; however, it is the job of the back-end code generator to provide an implementation that fully supports all of these requirements. For example, there may not be enough free actual system registers to hold 10 64-bit values, so some of those registers may be implicitly converted by the back-end into memory references. More details on how to provide these abstractions will be available in the Back-End Author's Guide.
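As a rough illustration of the requirements listed above, a back-end written in C might hold the abstract state in a structure like the following. This is only a sketch: every name in it (uml_state, uml_push_return, and so on) is hypothetical, and the 32-bit width chosen for the exception parameter is an assumption, since the text above does not specify it. Map variables are left out because they are constants tied to positions in the code rather than runtime state.

```c
#include <stdint.h>
#include <string.h>

/* Sizes taken from the requirements list; the identifiers are illustrative. */
enum {
    UML_INT_REGS   = 10,   /* i0-i9 */
    UML_FLOAT_REGS = 10,   /* f0-f9 */
    UML_CALL_DEPTH = 16    /* call stack entries */
};

typedef struct uml_state {
    uint64_t    i[UML_INT_REGS];            /* integer registers */
    uint64_t    f[UML_FLOAT_REGS];          /* float registers kept as raw bit patterns */
    uint8_t     flags;                      /* 5 flag bits in bits 0-4 */
    uint32_t    exception_param;            /* width is an assumption */
    const void *call_stack[UML_CALL_DEPTH]; /* return addresses */
    int         call_depth;                 /* number of entries in use */
} uml_state;

/* Put the abstract machine into a known empty state. */
void uml_state_reset(uml_state *s)
{
    memset(s->i, 0, sizeof s->i);
    memset(s->f, 0, sizeof s->f);
    s->flags = 0;
    s->exception_param = 0;
    s->call_depth = 0;
}

/* Record a return address; returns 0 when all 16 entries are in use. */
int uml_push_return(uml_state *s, const void *ret)
{
    if (s->call_depth >= UML_CALL_DEPTH)
        return 0;
    s->call_stack[s->call_depth++] = ret;
    return 1;
}
```

A back-end that maps some registers onto native registers would only spill to a structure like this when it runs out of native registers or leaves generated code.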

Code Cache

One of the primary features of a dynamic recompiler is its ability to cache and quickly recall already-translated code. Because of this, the concept of a code cache is central to the UML. The code cache not only contains all the generated code, along with the necessary hash tables to find it, but it also serves as a general heap for any data referenced by the generated code. Memory can be allocated from the cache and thus kept in the vicinity of the code that is likely to reference it. On many architectures, memory that is close to the code can be more efficiently accessed, so it is important to make good use of the memory management provided by the cache.

The cache is created by the dynamic recompiler at initialization time. The size of the cache is fixed once it is created, so it is important to create a cache that is large enough to hold a typical translated working set. If the cache is too small, then code will be flushed from it relatively quickly, and your CPU usage will increase because you are spending extra time to re-translate code that could have been executed from the cache.

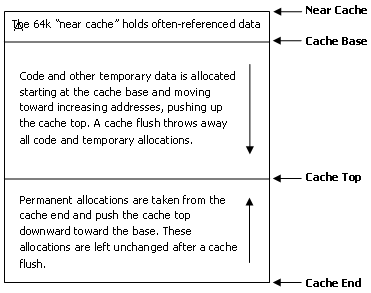

The cache is divided into three sections. The topmost section is known as the near cache and is a fixed size (64k). The near cache is where frequently-accessed data should be stored. Generally this includes the current architectural state of the CPU that is being emulated, along with tables or other data that is frequently accessed by the UML code. It is also important to realize that many UML opcodes support using memory locations as parameters, but only if those memory locations are within the near cache.

The bottommost section of the cache is where permanent memory allocations are taken from. Data structures that are used and re-used throughout the lifetime of the dynamic recompiler are allocated here. When memory is allocated from this section, the cache end is moved downward, reducing the amount of free space in the cache. Although memory that has been allocated from this section can be freed, it does not affect the position of the cache end. Rather, that data is kept in a free list and re-used for the next memory allocation of a similar size.

The middle section of the cache is where the most action is. This is where all temporary memory allocations and code generation take place. It starts at the cache base, which is simply fixed at the end of the near cache, and can expand as far as the cache end, which is where the permanent memory allocations lie. The cache top represents the position within this region where the next code will be generated or the next block of memory allocated. As code is generated and added to the cache, the cache top moves forward until it reaches the cache end. When that happens, the cache is flushed. A flush simply resets the cache top back to the cache base, effectively throwing away everything that has accumulated in this middle section and starting over.
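The relationship between the cache base, top, and end can be sketched in C as below. This is a simplified model, not the actual cache code: the names (code_cache, cache_alloc_temp, cache_alloc_perm, cache_flush) are hypothetical, alignment is ignored, and the free list for released permanent allocations is omitted.

```c
#include <stddef.h>
#include <stdint.h>

#define NEAR_CACHE_SIZE (64 * 1024)   /* fixed size of the near cache */

typedef struct code_cache {
    uint8_t *near_start; /* start of the near cache */
    uint8_t *base;       /* fixed: first byte after the near cache */
    uint8_t *top;        /* next free byte for code or temporary data */
    uint8_t *end;        /* moves downward as permanent memory is taken */
} code_cache;

/* The caller must supply a region larger than NEAR_CACHE_SIZE. */
void cache_init(code_cache *c, uint8_t *memory, size_t size)
{
    c->near_start = memory;
    c->base = memory + NEAR_CACHE_SIZE;
    c->top  = c->base;
    c->end  = memory + size;
}

/* Temporary allocation: advance the top. NULL means a flush is needed. */
void *cache_alloc_temp(code_cache *c, size_t bytes)
{
    void *p;
    if ((size_t)(c->end - c->top) < bytes)
        return NULL;
    p = c->top;
    c->top += bytes;
    return p;
}

/* Permanent allocation: move the end downward. */
void *cache_alloc_perm(code_cache *c, size_t bytes)
{
    if ((size_t)(c->end - c->top) < bytes)
        return NULL;
    c->end -= bytes;
    return c->end;
}

/* A flush discards the middle section only; permanent data survives. */
void cache_flush(code_cache *c)
{
    c->top = c->base;
}
```

Note that a flush leaves the cache end where it is, so space taken by permanent allocations is never returned to the middle section.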

Although it could be argued that there might be value in keeping some frequently-used cached code around when running out of space, in practice it is not worth the extra bookkeeping necessary to make that determination. The dynamic recompiler and back-end should operate relatively quickly, making the performance hit of regenerating the code minimal.

Code Generation

UML code is generated in blocks. A block of UML code is defined to be self-contained. That is, all local jumps within the code are resolved, and all calls or jumps to code outside of the block are performed via either code handles or code hashes, which are described below. In general, a code block is either a subroutine or a translated sequence of code. The dynamic recompiler generates the block one instruction at a time using helper functions and macros provided by the UML system, which in response encodes the instruction opcodes and parameters into a sequence of structures. Once a block is complete, the dynamic recompiler notifies the UML system, which takes the list of structures and hands it off to the back-end to perform final translation.

One potential problem is that during code generation, the back-end may run out of space in the cache. When this happens, the cache needs to be flushed, and whatever was being generated needs to be regenerated from scratch. To accomplish this, the UML makes use of setjmp/longjmp. Before a block is started, the dynamic recompiler performs a setjmp and passes the jump buffer to the UML. If at any time the cache runs out of space, the UML performs a longjmp back to the starting point, which is responsible for flushing the cache and starting the codegen over again.
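The setjmp/longjmp arrangement described above might look roughly like this in C. The sketch replaces the real cache and back-end with a byte counter, and all names (codegen, emit_instruction, generate_block) and the fixed 4-byte instruction size are assumptions made for illustration.

```c
#include <setjmp.h>
#include <stddef.h>

#define INSTR_BYTES 4   /* pretend every instruction needs 4 bytes */

typedef struct codegen {
    jmp_buf *retry;     /* jump buffer supplied by the recompiler */
    size_t   used;      /* bytes consumed in the cache */
    size_t   capacity;  /* total bytes available */
    int      flushes;   /* how many times the cache was flushed */
} codegen;

/* Stand-in for the UML/back-end: bail out when the cache is full. */
static void emit_instruction(codegen *g)
{
    if (g->used + INSTR_BYTES > g->capacity)
        longjmp(*g->retry, 1);
    g->used += INSTR_BYTES;
}

static void emit_block(codegen *g, size_t count)
{
    for (size_t n = 0; n < count; n++)
        emit_instruction(g);
}

/* Stand-in for the recompiler: returns 0 on success, -1 if the block
   could never fit even in an empty cache. */
int generate_block(codegen *g, size_t count)
{
    jmp_buf env;

    if (count * INSTR_BYTES > g->capacity)
        return -1;

    if (setjmp(env) != 0) {
        /* Arrived here via longjmp: flush, then regenerate from scratch. */
        g->used = 0;
        g->flushes++;
    }
    g->retry = &env;
    emit_block(g, count);
    g->retry = NULL;
    return 0;
}
```

The up-front size check matters: without it, a block larger than the whole cache would flush and retry forever.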

Code Flow

Because the details of back-end code generation are abstracted, code flow becomes a little tricky, since the addresses of the code you wish to jump to are not known until the back-end translates to the final code. To remedy this, the UML introduces three concepts: code handles, code hashes, and code labels.

A code handle is a globally accessible reference to a block of code. In practice, a code handle is allocated from the near cache by the dynamic recompiler and contains a pointer to the generated code provided by the back-end. When first allocated, a code handle is empty, since the back-end hasn't had a chance to generate the final code yet. Similarly, when the cache is flushed, all code handles are automatically reset to their empty state, since any code they referenced has been jettisoned. During back-end code generation, when a HANDLE opcode is encountered, the back-end will fill in the code handle's code pointer with the current cache top, which is where subsequent code will be generated.

To execute code referenced by a handle, the dynamic recompiler calls the UML from C code, passing in the handle where it should begin execution. A pointer to the generated code for this handle is extracted from the handle and then the back-end is called to begin execution. UML code can also make subroutine calls to handle-based code via the CALLH opcode, or it can invoke handle-based code to handle an exception via the EXH opcode.
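The life cycle of a code handle (empty when allocated, bound when the back-end reaches a HANDLE opcode, empty again after a flush) can be modelled with a small C sketch. The structure and function names are hypothetical, and chaining handles in a linked list so that a flush can find them is an assumption rather than something stated above.

```c
#include <stddef.h>

typedef struct code_handle {
    const void         *code;  /* NULL while the handle is empty */
    struct code_handle *next;  /* link used to reset all handles on a flush */
} code_handle;

typedef struct handle_list {
    code_handle *head;
} handle_list;

/* Called when the recompiler allocates a handle: it starts out empty. */
void handle_register(handle_list *list, code_handle *h)
{
    h->code = NULL;
    h->next = list->head;
    list->head = h;
}

/* What the back-end does on a HANDLE opcode: point at the cache top. */
void handle_bind(code_handle *h, const void *cache_top)
{
    h->code = cache_top;
}

/* On a cache flush every handle returns to its empty state. */
void handles_reset(handle_list *list)
{
    for (code_handle *h = list->head; h != NULL; h = h->next)
        h->code = NULL;
}

/* Execution may only begin at a handle that currently has code. */
int handle_is_valid(const code_handle *h)
{
    return h->code != NULL;
}
```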

A code hash is a more indirect way to create a global reference to a block of code, more typically used for hopping between blocks of translated code. As its name implies, a code hash is filed away in a hash table or some other structure that is maintained by the back-end code. A code hash is represented by two values: a PC, which is typically the linear address of a block of code, and a mode, which allows further differentiation between code which may live at the same PC but execute in different contexts. During back-end code generation, when a HASH opcode is encountered, the back-end will take the PC and mode specified in the opcode and create an entry in its hash table pointing to the current cache top.

The only way to execute code referenced in the hash table is via the UML opcode HASHJMP, which accepts a mode and PC, performs the lookup, and either continues execution at the target code entry, or generates an exception if no code exists for that hash entry.
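A minimal sketch of the lookup keyed on mode and PC follows. The text above leaves the actual structure up to the back-end, so the fixed-size open-addressing table, the hash function, and every name here are assumptions chosen for brevity. A NULL result from hash_lookup corresponds to the case where HASHJMP would generate an exception.

```c
#include <stddef.h>
#include <stdint.h>

#define HASH_SLOTS 256

typedef struct hash_entry {
    uint32_t    mode;
    uint32_t    pc;
    const void *code;   /* NULL marks an unused slot */
} hash_entry;

typedef struct code_hash {
    hash_entry slots[HASH_SLOTS];
} code_hash;

static size_t slot_for(uint32_t mode, uint32_t pc)
{
    return (size_t)(((pc * 2654435761u) ^ mode) % HASH_SLOTS);
}

/* Empties the table; done at creation and after every cache flush. */
void hash_reset(code_hash *t)
{
    for (size_t n = 0; n < HASH_SLOTS; n++)
        t->slots[n].code = NULL;
}

/* What a HASH opcode does: record (mode, pc) -> current cache top.
   Returns 0 if the table is full. */
int hash_set(code_hash *t, uint32_t mode, uint32_t pc, const void *code)
{
    size_t start = slot_for(mode, pc);
    for (size_t n = 0; n < HASH_SLOTS; n++) {
        hash_entry *e = &t->slots[(start + n) % HASH_SLOTS];
        if (e->code == NULL || (e->mode == mode && e->pc == pc)) {
            e->mode = mode;
            e->pc   = pc;
            e->code = code;
            return 1;
        }
    }
    return 0;
}

/* What HASHJMP needs: the code for (mode, pc), or NULL if none exists. */
const void *hash_lookup(const code_hash *t, uint32_t mode, uint32_t pc)
{
    size_t start = slot_for(mode, pc);
    for (size_t n = 0; n < HASH_SLOTS; n++) {
        const hash_entry *e = &t->slots[(start + n) % HASH_SLOTS];
        if (e->code == NULL)
            return NULL;
        if (e->mode == mode && e->pc == pc)
            return e->code;
    }
    return NULL;
}
```

Because entries are never removed individually (only wiped wholesale on a flush), linear probing needs no tombstones here.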

A code label is a mechanism to handle branches within a block. Code labels can be seen as analogous to labels in a typical assembly language. The primary difference is that the label itself is the UML opcode LABEL with a 32-bit integer identifier that must be unique within the block. Because the label itself is an opcode, it is easy for the back-end to determine whether a given instruction can be blended with neighboring instructions, since branches can only occur to the label opcodes. Labels are not resolved until back-end code generation, so if you make a mistake and reference an invalid label or forget to define a label, it won't be caught until the block is ended.

Code labels can be branched to via the JMP instruction, either unconditionally or via one of 16 conditions.
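The two label rules (identifiers unique within a block, every branch target defined) lend themselves to a check at the end of a block. The following C sketch shows one way such a check could work; the instruction structure and function names are hypothetical and far simpler than real UML instructions.

```c
#include <stddef.h>
#include <stdint.h>

typedef enum { OP_LABEL, OP_JMP, OP_OTHER } opcode;

typedef struct inst {
    opcode   op;
    uint32_t label;   /* identifier for OP_LABEL, target for OP_JMP */
} inst;

/* Returns 1 if the block defines a LABEL with the given identifier. */
static int has_label(const inst *block, size_t count, uint32_t id)
{
    for (size_t n = 0; n < count; n++)
        if (block[n].op == OP_LABEL && block[n].label == id)
            return 1;
    return 0;
}

/* Returns 1 when every label is unique and every JMP has a target. */
int block_labels_valid(const inst *block, size_t count)
{
    for (size_t i = 0; i < count; i++) {
        if (block[i].op == OP_LABEL) {
            /* A later LABEL with the same identifier is a duplicate. */
            for (size_t j = i + 1; j < count; j++)
                if (block[j].op == OP_LABEL && block[j].label == block[i].label)
                    return 0;
        } else if (block[i].op == OP_JMP) {
            if (!has_label(block, count, block[i].label))
                return 0;
        }
    }
    return 1;
}
```

The quadratic scan keeps the sketch short; a real implementation would more likely record labels in a table as they are encountered.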

Opcode Conventions

Before diving into the details of the opcodes, it is important to understand some general principles and conventions:

Integer Registers. There are 10 integer registers, each 64 bits wide. The same registers are used for both 32-bit and 64-bit opcodes; however, unlike many real computer architectures, the upper 32 bits are fully undefined when a 32-bit operation is performed. This means you cannot load a 64-bit value, perform a 32-bit operation, and expect the upper 32 bits to be anything in particular when you are finished.

Most back-ends are expected to assign as many integer registers to native integer registers as possible; however, some architectures do not support mapping all 10 registers in this way. Because of this, dynamic recompilers should try to use the first few registers aggressively, only resorting to the later registers where necessary.

The contents of the integer registers are lost whenever an EXIT opcode is encountered.
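Because the upper 32 bits are undefined after a 32-bit operation, code that wants to treat such a result as a 64-bit value has to extend it explicitly first. The helpers below show what zero extension and sign extension amount to on the raw 64-bit register contents. They are plain C illustrations with hypothetical names, not UML opcodes.

```c
#include <stdint.h>

/* Keep the low 32 bits and clear whatever is in the upper half. */
uint64_t zext32(uint64_t reg)
{
    return reg & 0xffffffffULL;
}

/* Keep the low 32 bits and copy bit 31 into the upper half.
   Done with unsigned arithmetic only, so it is fully portable. */
uint64_t sext32(uint64_t reg)
{
    uint64_t low = reg & 0xffffffffULL;
    return (low ^ 0x80000000ULL) - 0x80000000ULL;
}
```

In both cases the previous upper 32 bits are discarded rather than trusted, which is the only safe assumption under the rule above.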

Floating Point Registers. As with the integer registers, there are 10 floating point registers, each 64 bits wide. The same registers are again used for both 32-bit and 64-bit opcodes, and the upper 32 bits are fully undefined when a 32-bit operation is performed.

Floating point registers must not perform any conversions when loaded/stored/moved. This means that the floating point register set must support holding arbitrary values without performing any implicit conversion. Back-end architectures that cannot meet this requirement (e.g., the Intel x86 FPU) must keep the 10 floating point registers in memory and only convert data when performing arithmetic operations.

Back-end support for floating point registers is often even more limited than support for integer registers, so dynamic recompilers should focus on using the first few registers as much as possible.

The contents of the floating point registers are lost whenever an EXIT opcode is encountered.
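One way a C back-end could satisfy the no-conversion rule is to store the floating point registers as raw 64-bit patterns and only reinterpret them as doubles for arithmetic. The sketch below assumes the host uses IEEE 754 binary64 for double; the names fregs, fmov, and fadd64 are hypothetical.

```c
#include <stdint.h>
#include <string.h>

typedef struct fregs {
    uint64_t f[10];   /* f0-f9 stored as raw bit patterns */
} fregs;

/* A move copies bits exactly, so NaN payloads and other unusual
   patterns survive untouched. */
void fmov(fregs *r, int dst, int src)
{
    r->f[dst] = r->f[src];
}

/* Arithmetic is the only place the bits are interpreted as doubles. */
void fadd64(fregs *r, int dst, int a, int b)
{
    double x, y, sum;
    memcpy(&x, &r->f[a], sizeof x);
    memcpy(&y, &r->f[b], sizeof y);
    sum = x + y;
    memcpy(&r->f[dst], &sum, sizeof sum);
}
```

Using memcpy rather than a pointer cast avoids aliasing problems and lets the compiler choose how to move the bits.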

Immediates. Immediate values can be up to 64 bits wide. Of course, it only makes sense to use immediate values that fit in the size of the opcode.

Memory Parameters. Memory parameters can be used in most of the places where register parameters are permitted. The memory parameter size is implicitly determined by the opcode. Most importantly, for the majority of instructions, any memory parameters must reside in the near cache. This is to ensure that they can be efficiently accessed on all architectures. The lone exceptions to this rule are the LOAD and STORE opcodes, which can be used to access memory anywhere.

Map Variables. Map variables are constant values that are encoded into the instruction stream. They are used to recover values when a subroutine or exception occurs, based on the caller's address. Map variables are only 32 bits wide and when used in code always translate into immediate values.

Flags. There are 5 flags defined by the architecture:

- C (bit 0) is the carry flag, and indicates an unsigned carry in arithmetic operations or the shift-out value in rotate/shift operations

- V (bit 1) is the overflow flag, and indicates a signed overflow in arithmetic operations

- Z (bit 2) is the zero flag, and indicates a zero result

- S (bit 3) is the sign flag, and indicates a negative result

- U (bit 4) is the unordered flag, and indicates that a floating point compare had at least one NaN parameter

Opcodes which can affect the flags must specify which flags they care about. Flags that are not explicitly requested are undefined.
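To make the flag definitions concrete, here is a sketch of a 32-bit add that computes only the flags the caller asked for. The bit positions come from the list above; the function name, its signature, and the choice to report unrequested flags as zero are assumptions. Since unrequested flags are undefined, callers should not rely on that last detail.

```c
#include <stdint.h>

enum {
    FLAG_C = 1 << 0,  /* carry */
    FLAG_V = 1 << 1,  /* signed overflow */
    FLAG_Z = 1 << 2,  /* zero */
    FLAG_S = 1 << 3,  /* sign */
    FLAG_U = 1 << 4   /* unordered (floating point compares only) */
};

/* Adds a and b, storing the requested subset of flags in *flags_out. */
uint32_t add32_flags(uint32_t a, uint32_t b, unsigned wanted, unsigned *flags_out)
{
    uint32_t r = a + b;
    unsigned f = 0;

    if (r < a)
        f |= FLAG_C;                     /* unsigned wraparound */
    if (((a ^ r) & (b ^ r)) >> 31)
        f |= FLAG_V;                     /* operands share a sign the result lacks */
    if (r == 0)
        f |= FLAG_Z;
    if (r >> 31)
        f |= FLAG_S;

    *flags_out = f & wanted;
    return r;
}
```

Letting opcodes name the flags they need gives a back-end room to skip the flag computation entirely when nothing is requested.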

Conditions. Control flow instructions and simple data move instructions support an optional condition, which allows behavior to occur based on the state of the flags. Each flag can be checked for on/off independently. In addition, the usual collection of G/GE/L/LE/A/AE/B/BE conditions is available.
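The following sketch shows how such conditions could be evaluated from the flag bits. The text above does not spell out how the combined conditions are defined, so the sketch assumes the conventional x86-style meanings after a compare (carry set means an unsigned borrow). The enumeration and its names are hypothetical. Under that assumption, B and AE test the same thing as carry set and carry clear.

```c
enum {
    FLAG_C = 1 << 0,
    FLAG_V = 1 << 1,
    FLAG_Z = 1 << 2,
    FLAG_S = 1 << 3,
    FLAG_U = 1 << 4
};

typedef enum {
    COND_Z, COND_NZ, COND_S, COND_NS, COND_C, COND_NC,
    COND_V, COND_NV, COND_U, COND_NU,
    COND_A, COND_AE, COND_B, COND_BE,   /* unsigned comparisons */
    COND_G, COND_GE, COND_L, COND_LE    /* signed comparisons */
} cond;

/* Returns 1 if the condition holds for the given flags, else 0. */
int cond_holds(cond c, unsigned flags)
{
    int cf = (flags & FLAG_C) != 0;
    int vf = (flags & FLAG_V) != 0;
    int zf = (flags & FLAG_Z) != 0;
    int sf = (flags & FLAG_S) != 0;
    int uf = (flags & FLAG_U) != 0;

    switch (c) {
    case COND_Z:  return zf;
    case COND_NZ: return !zf;
    case COND_S:  return sf;
    case COND_NS: return !sf;
    case COND_C:  return cf;
    case COND_NC: return !cf;
    case COND_V:  return vf;
    case COND_NV: return !vf;
    case COND_U:  return uf;
    case COND_NU: return !uf;
    case COND_A:  return !cf && !zf;
    case COND_AE: return !cf;
    case COND_B:  return cf;
    case COND_BE: return cf || zf;
    case COND_G:  return !zf && sf == vf;
    case COND_GE: return sf == vf;
    case COND_L:  return sf != vf;
    case COND_LE: return zf || sf != vf;
    }
    return 0;
}
```

For example, comparing 1 against 2 would set C and S under these assumptions, so both B and L hold while G and AE do not.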

Opcodes

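For information about particular opcodes, see one of these sections:

- UML Control Flow Opcodes

- UML Internal Register Opcodes

- UML Integer Opcodes

- UML Floating Point Opcodes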